Tuesday, February 6, 2018

Remote VPN for Microsoft employees

https://microsoft.sharepoint.com/sites/itweb/remote.

It even has a shorter link: http://aka.ms/remote

Hopefully next time I'll remember to search my blog...

Sunday, April 9, 2017

Windows Server Backup - Delete old backup

- wbadmin get disks - lists the disks in the system and the space used by the backups

- wbadmin get versions - lists the backup versions

- wbadmin delete backup -keepVersions:10 - deletes older backups, keeping the 10 most recent ones

- wbadmin delete backup -version:04/08/2017-10:16 - deletes the specified backup version
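If you want to preview which versions -keepVersions would remove before actually deleting anything, the selection logic can be sketched in Python. This is an illustrative sketch, not part of wbadmin: it assumes the version identifiers use the MM/DD/YYYY-HH:MM format shown in the -version example, and the function names are made up. It only builds the command lines; running them requires an elevated prompt.

```python
from datetime import datetime

# Assumed identifier format, taken from the example "04/08/2017-10:16".
VERSION_FORMAT = "%m/%d/%Y-%H:%M"


def versions_to_delete(version_ids, keep):
    """Hypothetical helper: versions that keeping only the newest `keep` would remove."""
    if keep < 1:
        raise ValueError("keep must be at least 1")
    # Sort oldest first by the timestamp encoded in each identifier.
    ordered = sorted(version_ids, key=lambda v: datetime.strptime(v, VERSION_FORMAT))
    # Everything except the last `keep` entries; empty if there are `keep` or fewer.
    return ordered[:-keep]


def delete_commands(version_ids):
    """Hypothetical helper: one 'wbadmin delete backup -version:...' command per version."""
    # -quiet skips the confirmation prompt; drop it to confirm each deletion.
    return [["wbadmin", "delete", "backup", f"-version:{v}", "-quiet"] for v in version_ids]
```

For example, versions_to_delete(["04/08/2017-10:16", "03/01/2017-09:00", "04/01/2017-22:30"], keep=2) selects only "03/01/2017-09:00". Each resulting list could then be passed to subprocess.run(cmd, check=True), or simply printed and pasted into an admin command prompt.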

Sunday, November 27, 2016

Cookie limits - browser side

Here is the result: http://alinconstantin.com/download/browsercookies/cookies.html

- JavaScript support for cookies sucks; it's a weird mechanism. A cookie is set by assigning to a property, document.cookie, but reading that property back returns all the cookies set (just names and values, even though the cookies could have other attributes like path or expiration date)

- If you set cookies from JavaScript, remember to keep the names of the cookies you set in a separate list! If you set cookies whose values go over the browser's per-domain limit, browsers like IE/Edge will clear document.cookie, and you'd have no way to enumerate the existing cookies (to know what to delete before being able to set new ones). FWIW, this behavior is browser dependent; Chrome/Firefox drop the oldest cookies instead...

- IE support for JavaScript sucks. In IE11, string.startsWith() and string.repeat() are not implemented, arrow functions like var f1 = () => { doSomething(); } are not understood, etc. Edge is better in this regard.

- Chrome is silly: it doesn't allow cookies when scripts are run from file:// locations.

- IE & Edge have very low limits for the total size of cookies. You probably don't want to send 10KB of cookies with every HTTP request, but I've seen websites hitting this limit... 15KB would have been more reasonable (and closer to the 16KB default limit for header sizes on the server side). On the plus side, 5KB per cookie is better than the ~4KB of all other browsers (and I've seen websites hitting that limit in other browsers, too).
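The document.cookie behavior described above can be modeled in a few lines. The sketch below is in Python for illustration only (in a page the same logic would be JavaScript working on document.cookie): it parses the "name=value; name2=value2" string that reading document.cookie returns, and keeps a separate record of the names we set, so those cookies can still be expired even if the browser has emptied document.cookie. CookieNameRegistry and its methods are hypothetical names, not a browser API; expiring a cookie by assigning it an empty value with a past expires date is the usual technique.

```python
from __future__ import annotations

# A date in the past: assigning "name=; expires=<this date>" makes the browser drop the cookie.
PAST_DATE = "Thu, 01 Jan 1970 00:00:00 GMT"


def parse_cookie_string(cookie_string: str) -> dict[str, str]:
    """Split a document.cookie-style string ("a=1; b=2") into {name: value}.

    Only names and values appear in the string; attributes such as path or
    expires are never returned, so they cannot be recovered here.
    """
    cookies: dict[str, str] = {}
    for part in cookie_string.split(";"):
        part = part.strip()
        if not part:
            continue
        # Split on the first '=' only: the value itself may contain '='.
        # Simplification: an entry without '=' is stored as a name with an empty value.
        name, _, value = part.partition("=")
        cookies[name] = value
    return cookies


class CookieNameRegistry:
    """Hypothetical helper that remembers every cookie name we set.

    The names are kept independently of document.cookie, so the cookies can
    still be expired after the browser has cleared what document.cookie returns.
    """

    def __init__(self) -> None:
        self._names: set[str] = set()

    def set_string(self, name: str, value: str, path: str = "/") -> str:
        """Remember `name` and return the string to assign to document.cookie.

        `value` is assumed to be already encoded (no ';', ',' or whitespace).
        """
        self._names.add(name)
        return f"{name}={value}; path={path}"

    def delete_strings(self, path: str = "/") -> list[str]:
        """Strings that expire every remembered cookie.

        The path must match the one used when the cookies were set.
        """
        return [f"{name}=; expires={PAST_DATE}; path={path}" for name in sorted(self._names)]
```

In a page, each string returned by set_string or delete_strings would be assigned to document.cookie one at a time, since each assignment sets (or expires) a single cookie.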

| Browser | Max bytes/cookie | Max cookies per domain | Max total bytes for cookies |
|---|---|---|---|
| IE11 & Edge | 5117 | 50 | 2*5117 = 10234 |
| Chrome 54 | 4096 | 180 | 180*4096 = 737280 |
| Firefox r50 | 4097 | 150 | 150*4097 = 614550 |
| Opera 41 | 4096 | 180 | 180*4096 = 737280 |
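The measured limits in the table can be turned into a quick pre-flight check for a planned set of cookies. A minimal sketch: the numbers are copied from the table, while the rule that a cookie's size is the bytes of its name plus its value is an assumption (browsers may count slightly differently), and the names CookieLimits and check_cookies are made up for illustration.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class CookieLimits:
    max_bytes_per_cookie: int
    max_cookies: int
    max_total_bytes: int


# Per-domain limits as measured in the table (not official browser documentation).
LIMITS = {
    "IE11 & Edge": CookieLimits(5117, 50, 10234),
    "Chrome 54": CookieLimits(4096, 180, 737280),
    "Firefox r50": CookieLimits(4097, 150, 614550),
    "Opera 41": CookieLimits(4096, 180, 737280),
}


def cookie_size(name: str, value: str) -> int:
    # Assumption: a cookie's size is counted as the bytes of its name plus its value.
    return len(name.encode("utf-8")) + len(value.encode("utf-8"))


def check_cookies(cookies: dict[str, str], limits: CookieLimits) -> list[str]:
    """Return the limit violations for `cookies` (an empty list means they should fit)."""
    problems: list[str] = []
    if len(cookies) > limits.max_cookies:
        problems.append(f"{len(cookies)} cookies > {limits.max_cookies} allowed")
    for name, value in cookies.items():
        size = cookie_size(name, value)
        if size > limits.max_bytes_per_cookie:
            problems.append(f"cookie {name!r} is {size} bytes > {limits.max_bytes_per_cookie}")
    total = sum(cookie_size(n, v) for n, v in cookies.items())
    if total > limits.max_total_bytes:
        problems.append(f"total {total} bytes > {limits.max_total_bytes}")
    return problems
```

For example, three cookies of about 4000 bytes each pass the Chrome 54 check but exceed the ~10KB total of IE11 & Edge, while a single 5000-byte cookie fits in IE/Edge but is too big for Chrome.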

Thursday, November 24, 2016

Cookie limits - the server side story

RFC 2965 says a browser should support at least 20 cookies per domain and at least 4096 bytes per cookie, but browsers usually support higher limits. For example, Chrome supports 180 cookies of 4096 bytes each per domain, with no limit on the total size of all cookies. That makes 180*4096 = 737280 bytes (720KB) of data that Chrome allows in each request.

In reality, even if you insist on sending that crazy amount of data with every HTTP request, you'll discover it's impossible to use that many cookies. Depending on the server you access, you may be able to use at most 3 cookies of 4096 bytes! Why? Because there is another side of the story: the servers you access also limit the size of the cookies they accept.
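The arithmetic behind "at most 3 cookies" can be checked with a short sketch. It assumes a 16KB limit on the whole request header block (roughly where www.microsoft.com and portal.office.com start refusing requests in the list below) and a guessed 500 bytes for the request line and all other headers; both numbers and the function name are illustrative assumptions, not measured values.

```python
COOKIE_PREFIX = "Cookie: "  # header name, colon and space
SEPARATOR = "; "            # placed between cookies in the Cookie header
CRLF = 2                    # bytes of the line terminator ending the header


def max_full_size_cookies(header_budget: int, other_headers: int, cookie_bytes: int = 4096) -> int:
    """How many cookies of `cookie_bytes` each (the whole "name=value" text) fit in the headers.

    header_budget: assumed server limit on the total size of the request headers.
    other_headers: assumed bytes used by the request line and all other headers.
    """
    available = header_budget - other_headers - len(COOKIE_PREFIX) - CRLF
    if available < cookie_bytes:
        return 0
    # n cookies need n * cookie_bytes plus (n - 1) separators:
    # n * cookie_bytes + (n - 1) * sep <= available  <=>  n <= (available + sep) / (cookie_bytes + sep)
    return (available + len(SEPARATOR)) // (cookie_bytes + len(SEPARATOR))


if __name__ == "__main__":
    print(180 * 4096)                             # Chrome's allowance: 737280
    print(max_full_size_cookies(16 * 1024, 500))  # under a 16KB header limit: 3
```

With these assumptions the answer is 3, and it stays 3 even with no other headers at all: the "Cookie: " prefix and the "; " separators push four 4096-byte cookies to 16400 bytes, just over 16384.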

The limits vary from one HTTP server to another, and so does the server's response to larger requests. Here are some examples:

- www.microsoft.com - throws SocketException / ConnectionForcefullyClosedByRemoteServer after ~16KB of cookies

- portal.office.com - Starts returning "400 Bad Request – Request Too Long. HTTP Error 400. The size of the request headers is too long" after ~15KB of cookies

- www.google.com - Starts returning 413 Request Entity Too Large after ~15KB of cookies

- www.amazon.com - Starts returning 400 Bad Request after ~7.5KB of cookies

- www.yahoo.com - Accepts requests with up to ~65KB of cookies; after that it returns 400 Bad Request

- www.facebook.com - Accepts ~80KB of cookies; after that it starts returning 400 or 502, or the request throws WebException/MessageLengthLimitExceeded (this seems to depend on the number of cookies, too)

If you're writing a web application that uses cookies pushing these limits, it's important to know what your server will tolerate on incoming requests.
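One way to measure this is to send requests with cookie payloads of different sizes and narrow down the largest one the server accepts. The downloadable app mentioned next probes with decreasing sizes; the sketch below swaps in a binary search instead, and assumes acceptance is monotonic (once a size is rejected, every larger size is rejected too), which the Facebook results suggest may not always hold. It also assumes the URL returns a success status when the cookies are small, since any HTTP error is counted as a rejection. All function names are hypothetical.

```python
import urllib.error
import urllib.request
from typing import Callable


def build_cookie_header(total_bytes: int, max_cookie_bytes: int = 4096) -> str:
    """Cookie header value whose "name=value" pieces add up to about total_bytes."""
    pieces = []
    remaining = total_bytes
    i = 0
    while remaining > 0:
        prefix = f"c{i:03d}="
        size = min(remaining, max_cookie_bytes)
        # At least one filler byte per cookie, so a tiny remainder may overshoot slightly.
        piece = prefix + "x" * max(1, size - len(prefix))
        pieces.append(piece)
        remaining -= len(piece)
        i += 1
    return "; ".join(pieces)


def find_max_accepted(accepts: Callable[[int], bool], low: int, high: int) -> int:
    """Largest size in [low, high] for which accepts(size) is True.

    Assumes accepts(low) is True and that acceptance is monotonic in size.
    """
    while low < high:
        mid = (low + high + 1) // 2  # round up so the loop always makes progress
        if accepts(mid):
            low = mid
        else:
            high = mid - 1
    return low


def http_probe(url: str, timeout: float = 10.0) -> Callable[[int], bool]:
    """Return accepts(size): True if a GET carrying ~size bytes of cookies succeeds."""
    def accepts(size: int) -> bool:
        request = urllib.request.Request(url, headers={"Cookie": build_cookie_header(size)})
        try:
            with urllib.request.urlopen(request, timeout=timeout):
                return True
        except urllib.error.HTTPError:
            return False  # e.g. 400 Bad Request or 413 Request Entity Too Large
        except OSError:
            return False  # e.g. connection forcibly closed, or a timeout
    return accepts
```

A run against a server might look like find_max_accepted(http_probe("http://www.example.com/"), 0, 128 * 1024), which needs only about 17 requests to cover 0 to 128KB.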

I wrote an app you can use to test a server and get an idea of its limits. You can download it from http://alinconstantin.com/Download/ServerCookieLimits.zip and invoke it with the http:// URI of the server to test as a parameter. The app makes requests to the server with cookies of decreasing sizes, trying to narrow down the maximum accepted cookie size. The output looks like the picture below.

Saturday, September 5, 2015

Useless router WiFi speeds and maximum Surface Pro 3 WiFi speed

My WiFi router is a Netgear Nighthawk R8000, which boasts a 3.2Gbps WiFi speed. That's just PR: in reality, it has one 2.4GHz band with a max of 600Mbps and two 5GHz bands, each supporting a max of 1300Mbps, and 600 + 1300 + 1300 = 3200Mbps is where the 3.2Gbps comes from. A device connects to only one band, so its connection speed is limited to the max speed of the band it's using. But that's not the end of the story: both my Surface Pro 3 and my wife's laptop connect at a maximum of 866.5Mbps, and only when staying 2-3 feet from the router. The speed is actually negotiated between the router and the client device. If I move 10 feet away, the speed starts dropping to 700Mbps. If I stay in the living room, the speed drops to 80-90Mbps.

The Surface Pro 3 has a 'Marvell AVASTAR Wireless-AC Network Controller' Wi-Fi adapter, and according to http://www.marvell.com/wireless/avastar/88W8897 its maximum WiFi speed is 867Mbps.

I'm reaching this speed (so Microsoft kept its promise and fixed the low-speed problem), but I have to be within a few feet of the router to reach it. And even in these ideal conditions, I'd need at least 4 Surfaces to saturate the two 5GHz bands, plus more WiFi devices connecting on 2.4GHz, to reach the router's advertised speed... The router speeds are just a PR gimmick.
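The numbers in this post can be reproduced with a little arithmetic. A minimal sketch, assuming (as the post does) that each client uses one band at a time and that the negotiated link rate is what matters; link rates are PHY rates, and real throughput is lower, so this only checks the post's reasoning. The names are illustrative.

```python
import math

# Figures from the post, in Mbps.
BAND_MAX = {"2.4GHz": 600, "5GHz-1": 1300, "5GHz-2": 1300}
CLIENT_LINK = 866.5  # best link rate the Surface Pro 3 negotiated


def advertised_total(bands: dict) -> float:
    """The marketing number: the sum of all band maxima."""
    return sum(bands.values())


def clients_to_saturate(band_max: float, client_link: float) -> int:
    # A client is associated with one band at a time, so each band fills up separately.
    return math.ceil(band_max / client_link)
```

advertised_total(BAND_MAX) gives the 3200Mbps on the box, and each 5GHz band needs ceil(1300 / 866.5) = 2 clients at full speed, hence at least 4 Surfaces for the two 5GHz bands.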

Sunday, November 2, 2014

Digital Photo Professional cannot edit CR2 RAW files

Today I spent almost an hour trying to figure out why Canon DPP was not able to edit some pictures I had taken a while ago. All the pictures in one folder were shown like this:

Notice the glyph on top of each image indicating editing was not allowed.

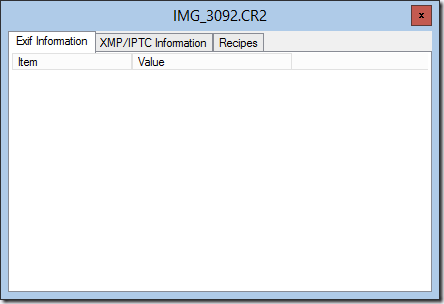

I searched the menus three times for some option to unblock editing, but there was none. I thought the files might be read-only on disk or have wrong ACLs. Nothing. One website suggested that images edit-protected in the camera can be unblocked from the Info window, but for these images that window was completely empty instead of displaying EXIF info.

It was only happening with images in one folder, so I moved an image out of that folder, but nothing changed.

Hours later I viewed the images in Explorer from a different computer, and then I noticed something odd: why were these CR2 files so small compared with the other pictures? Were they corrupted?

And then it hit me: when I took those pictures, the battery of my 5D Mark III ran out and I had to use my old camera, a Canon 20D. And DPP was not able to open the files from this older camera…

After a little digging on the net, I had confirmation: Canon has released Digital Photo Professional 4.0, but only for 64-bit computers and only for certain cameras like the Canon 5D Mark III. Older cameras like the Canon 20D are not supported by DPP 4, so I had to download the previous version, DPP 3.14, to edit the RAW files. It turns out that even new Canon cameras like the 7D Mark II are not supported by DPP 4.0, on either 32- or 64-bit Windows. Hopefully Canon will reconsider and add support for all cameras in a future release of DPP 4…

Thursday, September 18, 2014

How to install Active Directory (AD) tools on Windows 8

This article describes the steps in great detail:

http://www.technipages.com/windows-8-install-active-directory-users-and-computers